Peter Lobner

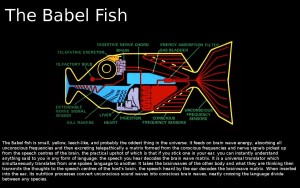

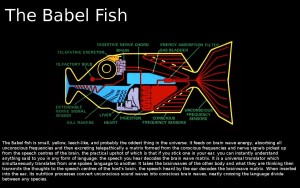

In Douglas Adams’ 1978 BBC radio series and 1979 novel, “The Hitchhiker’s Guide to the Galaxy,” we were introduced to the small, yellow, leech-like Babel fish, which feeds on brain wave energy.

Source: http://imgur.com/CZgjO

Adams wrote, “The practical upshot of all this is that if you stick a Babel fish in your ear you can instantly understand anything said to you in any form of language.”

In Gene Roddenberry’s original Star Trek series, a less compact but, thankfully, inorganic universal translator served Captain Kirk and the Enterprise crew well in their many encounters with alien life forms in the mid-2260s. You can see a hand-held version (looking a bit like a light saber) in the following photo from the 1967 episode, “Metamorphosis.”

Source: http://visiblesuns.blogspot.com/2014/01/star-trek-metamorphosis.html

A miniaturized universal translator built into each crewmember’s personal communicator soon replaced this version of the universal translator.

At the rate that machine translation technology is advancing here on Earth, it’s clear that we won’t have to wait very long for our own consumer-grade, portable, “semi-universal” translator that can deliver real-time audio translations of conversations in different languages.

Following is a brief overview of current machine translation tools:

BabelFish

If you just want a free online machine translation service, check out my old favorite, BabelFish, originally from SYSTRAN (1999), then AltaVista (2003), then Yahoo (2003 – 2008), and today at the following link:

https://www.babelfish.com

With this tool, you can do the following:

- Translate any language into any one of 75 supported languages

- Translate entire web pages and blogs

- Translate full document formats such as Word, PDF and text

When I first used BabelFish more than a decade ago, I often was surprised by the results when I reverse-translated text I had just translated into Russian or French.

While BabelFish doesn’t support real-time, bilingual voice translations, it was an important, early machine translation engine that has evolved into a more capable, modern translation tool.

Google Translate

This is a machine translation service / application that you can access at the following link:

https://translate.google.com

Google Translate also is available as an iPhone or Android app and currently can translate text back and forth between any two of 92 languages.

Google Translate has several other very useful modes of operation, including translating text appearing in an image, translating speech, and translating bilingual conversations.

- Translate image: You can translate text in images—either in a picture you’ve taken or imported, or just by pointing your camera.

- Translate speech: You can translate words or phrases by speaking. In some languages, you’ll also hear your translation spoken back to you.

- Translate bilingual conversation: You can use the app to talk with someone in a different language. You can designate the language, or the Translate app will recognize which language is being spoken, allowing you to have a (more-or-less) natural conversation.

In a May 2014 paper by Haiying Li, Arthur C. Graesser and Zhiqiang Cai, entitled, “Comparison of Google Translation with Human Translation,” the authors investigated the accuracy of Google Chinese-to-English translations from the perspectives of formality and cohesion. The authors offered the following findings:

“…it is possible to make a conclusion that Google translation is close to human translation at the semantic and pragmatic levels. However, at the syntactic level or the grammatical level, it needs improving. In other words, Google translation yields a decipherable and readable translation even if grammatical errors occur. Google translation provides a means for people who need a quick translation to acquire information. Thus, computers provide a fairly good performance at translating individual words and phrases, as well as more global cohesion, but not at translating complex sentences.”

You can read the complete paper at the following link:

https://www.aaai.org/ocs/index.php/FLAIRS/FLAIRS14/paper/viewFile/7864/7823

A December 2014 article by Sumant Patil and Patrick Davies, entitled, “Use of Google Translate in Medical Communication: Evaluation of Accuracy,” also pointed to current limitations in using machine translations. The authors examined the accuracy of translating 10 common medical phrases into 26 languages (8 Western European, 5 Eastern European, 11 Asian, and 2 African) and reported the following:

“Google Translate has only 57.7% accuracy when used for medical phrase translations and should not be trusted for important medical communications. However, it still remains the most easily available and free initial mode of communication between a doctor and patient when language is a barrier. Although caution is needed when life saving or legal communications are necessary, it can be a useful adjunct to human translation services when these are not available.”

The authors noted that translation accuracy depended on the language: Swahili scored lowest, with only 10% correct, and Portuguese scored highest, at 90%.

You can read this article at the following link:

http://www.bmj.com/content/349/bmj.g7392

ImTranslator

ImTranslator, by Smart Link Corporation, is another machine translation service / tool, which you can find at the following link:

http://imtranslator.net

ImTranslator uses several machine translation engines, including Google Translate, Microsoft Translator, and Babylon Translator. One ImTranslator mode, called “Translate and Speak,” delivers the following functionality:

“….translates texts from 52 languages into 10 voice-supported languages. This … tool is smart enough to detect the language of the text submitted for translation, translate into voice, modify the speed of the voice, and even create an audio link to send a voiced message.”

I’ve done a few basic tests with Translate and Speak and found that it works well with simple sentences.

In conclusion

Machine translation has advanced tremendously over the past decade, and improved translation engines are the key to making a universal translator a reality. By combining cloud-based resources with a powerful smartphone app, Google Translate is able to deliver an “initial operating capability” (IOC) for a consumer-grade, real-time, bilingual voice translator.

This technology is out of the lab, rapidly improving based on broad experience from performing billions of translations, and seeking commercial applications. Surely in the next decade, we’ll be listening through our ear buds and understanding spoken foreign languages with good accuracy in multi-lingual environments. Making this capability “universal” (at least on Earth) will be a challenge for the developers, but a decade is a long time in this type of technology business.

There may be a downside to the widespread use of real-time universal translation devices. In “The Hitchhiker’s Guide to the Galaxy,” Douglas Adams noted:

“…..the poor Babel fish, by effectively removing all barriers to communication between different races and cultures, has caused more and bloodier wars than anything else in the history of creation.”

Perhaps foreseeing this possibility, Google Translate includes an “offensive word filter” that doesn’t allow you to translate offensive words by speaking. As you might guess, the app has a menu setting that allows the user to turn off the offensive word filter. Trusting that people always will think before speaking into their unfiltered universal translators may be wishful thinking.

19 May 2016 Update:

Thanks to Teresa Marshall for bringing to my attention the in-ear, real-time translation device named Pilot, which was developed by the U.S. firm Waverly Labs. To all appearances, Pilot is almost an electronic incarnation of the organic Babel fish. The initial version of Pilot uses two Bluetooth earbuds (one for you, and one for the person you’re talking to in a different language) and an app that runs locally on your smartphone without requiring web access. The app mediates the conversation in real time (with a slight processing delay), enabling each user to hear the conversation in their chosen language.

Photo credit: Waverly Labs

As you might guess, the initial version of Pilot will work with the popular Romance languages (e.g., French, Spanish), with a broader language handling capability coming in later releases.

Check out the short video from Waverly Labs at the following link:

https://www.youtube.com/watch?v=VO-naxKNuzQ

I can imagine that Waverly Labs will develop the capability for the Pilot app to listen to a nearby conversation and provide a translation to one or more users on paired Bluetooth earbuds. This would be a useful tool for international travelers (e.g., on a museum tour in a foreign language) and for spies.

You can find more information on Waverly Labs at the following link:

http://www.waverlylabs.com

Developing the more advanced technology to provide real-time translations in a noisy crowd with multiple, overlapping speakers will take more time, but at the rate that real-time translation technology is developing, we may be surprised by how quickly advanced translation products enter the market.