Peter Lobner

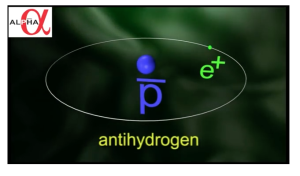

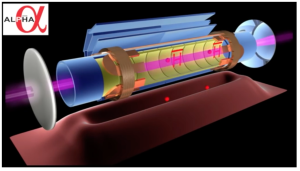

ALPHA-2 is a device at CERN, the European particle physics laboratory in Meyrin, Switzerland, used for collecting and analyzing antimatter, or more specifically, antihydrogen. A common hydrogen atom is composed of an electron and a proton. In contrast, an antihydrogen atom is made up of a positron bound to an antiproton.

The ALPHA-2 project homepage is at the following link:

On 16 December 2016, the ALPHA-2 team reported the first-ever optical spectroscopic observation of the 1S-2S (ground state to first excited state) transition in antihydrogen atoms that had been magnetically trapped and then excited by a laser.

“This is the first time a spectral line has been observed in antimatter. … This first result implies that the 1S-2S transition in hydrogen and antihydrogen are not too different, and the next steps are to measure the transition’s lineshape and increase the precision of the measurement.”

In the ALPHA-2 online news article, “Observation of the 1S-2S Transition in Trapped Antihydrogen Published in Nature,” you will find two short videos explaining how this experiment was conducted:

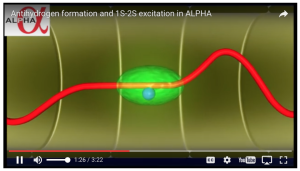

- Antihydrogen formation and 1S-2S excitation in ALPHA

- ALPHA first ever optical spectroscopy of a pure anti atom

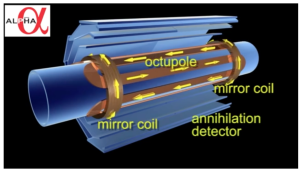

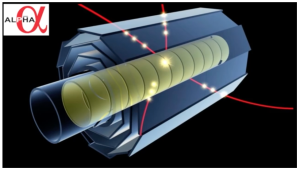

These videos describe the process for creating antihydrogen within a magnetic trap (octupole & mirror coils) containing positrons and antiprotons. Selected screenshots from the first video are reproduced below to illustrate the process of creating and exciting antihydrogen and measuring the results.

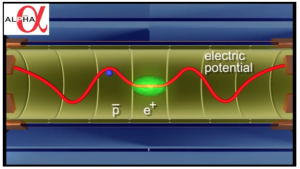

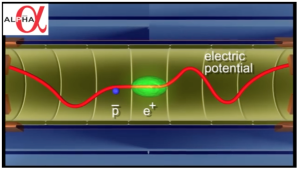

The potentials along the trap are manipulated to allow the initially separated positron and antiproton populations to combine, interact and form antihydrogen.

If the magnetic trap is turned off, the antihydrogen atoms will drift into the inner wall of the device and immediately be annihilated, releasing pions that are detected by the “annihilation detectors” surrounding the magnetic trap. This 3-layer detector provides a means for counting antihydrogen atoms.

A tuned laser is used to excite the antihydrogen atoms in the magnetic trap from the 1S (ground) state to the 2S (first excited) state. The interaction of the laser with the antihydrogen atoms is measured in two ways: by counting the free antiprotons that annihilate after photoionization (an excited antihydrogen atom absorbs another photon and loses its positron), and by counting the antihydrogen atoms still remaining in the trap when it is turned off. Two cases were investigated: (1) the laser tuned to the 1S-2S resonance frequency, and (2) the laser detuned from that frequency. The observed differences between these two cases confirmed that “the on-resonance laser light is interacting with the antihydrogen atoms via their 1S-2S transition.”
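To give a sense of what “tuned” means here, the following is a rough Bohr-model estimate of the 1S-2S transition energy and the corresponding laser wavelength. It is only a sketch under simplifying assumptions: it ignores the reduced-mass, fine-structure, and QED corrections that precision spectroscopy actually probes, and the constant and function names are illustrative choices, not taken from the ALPHA-2 papers. Because a direct single-photon 1S-2S transition is dipole-forbidden, this line is normally excited by absorbing two photons, each carrying half the transition energy, which puts the laser in the deep ultraviolet near 243 nm.

```python
# Rough Bohr-model estimate of the hydrogen 1S-2S transition.
# Assumption: infinite nuclear mass; reduced-mass, fine-structure and
# QED corrections are ignored, so this is an approximation only.
RYDBERG_EV = 13.605693   # Rydberg energy for infinite nuclear mass, in eV
HC_EV_NM = 1239.84198    # Planck constant times speed of light, in eV*nm

def level_energy_ev(n: int) -> float:
    """Bohr energy of level n in eV (negative means bound)."""
    return -RYDBERG_EV / n**2

def transition_ev(n_lower: int, n_upper: int) -> float:
    """Energy needed to go from n_lower to n_upper, in eV."""
    return level_energy_ev(n_upper) - level_energy_ev(n_lower)

delta_e = transition_ev(1, 2)         # 1S -> 2S energy gap
one_photon_nm = HC_EV_NM / delta_e    # wavelength if one photon supplied all of it
two_photon_nm = 2 * one_photon_nm     # each of two photons supplies half the energy

print(f"1S-2S energy: {delta_e:.2f} eV")
print(f"single-photon wavelength: {one_photon_nm:.1f} nm")
print(f"two-photon wavelength: {two_photon_nm:.1f} nm")
```

The single-photon value of about 121.5 nm is close to hydrogen’s Lyman-alpha line; the small difference comes mostly from the neglected reduced-mass correction.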

The ALPHA-2 team reported that the accuracy of the current antihydrogen measurement of the 1S-2S transition is about “a few parts in 10 billion” (10¹⁰). In comparison, this transition in common hydrogen has been measured to an accuracy of “a few parts in a thousand trillion” (10¹⁵).
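The gap between the two measurements is easier to grasp in absolute terms. The sketch below converts a fractional precision into a frequency uncertainty, assuming a hydrogen 1S-2S frequency of about 2.466 × 10¹⁵ Hz (an approximate value supplied here, not taken from the article) and treating “a few” as 3 for both measurements, which is a hypothetical placeholder rather than a reported figure.

```python
# Convert a fractional (relative) precision into an absolute frequency
# uncertainty for the 1S-2S line.
# Assumption: hydrogen 1S-2S frequency of about 2.466e15 Hz (approximate).
F_1S2S_HZ = 2.466e15

# Hypothetical stand-in for "a few"; the article gives no exact figure.
A_FEW = 3

def absolute_uncertainty_hz(relative_precision: float, freq_hz: float = F_1S2S_HZ) -> float:
    """Absolute uncertainty in Hz implied by a fractional precision."""
    return relative_precision * freq_hz

antihydrogen_rel = A_FEW / 1e10   # "a few parts in 10 billion"
hydrogen_rel = A_FEW / 1e15       # "a few parts in a thousand trillion"

antihydrogen_hz = absolute_uncertainty_hz(antihydrogen_rel)
hydrogen_hz = absolute_uncertainty_hz(hydrogen_rel)
ratio = antihydrogen_rel / hydrogen_rel   # how many times tighter the hydrogen result is

print(f"antihydrogen: about {antihydrogen_hz / 1e3:.0f} kHz")
print(f"hydrogen: about {hydrogen_hz:.1f} Hz")
print(f"hydrogen measurement is about {ratio:,.0f} times more precise")
```

Under these assumptions, the antihydrogen result pins the line down to within several hundred kHz, while the hydrogen measurement is good to several Hz, roughly five orders of magnitude tighter.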

For more information, see the 19 December 2016 article by Adrian Cho, “Deep probe of antimatter puts Einstein’s special relativity to the test,” which is posted on the Sciencemag.org website at the following link:

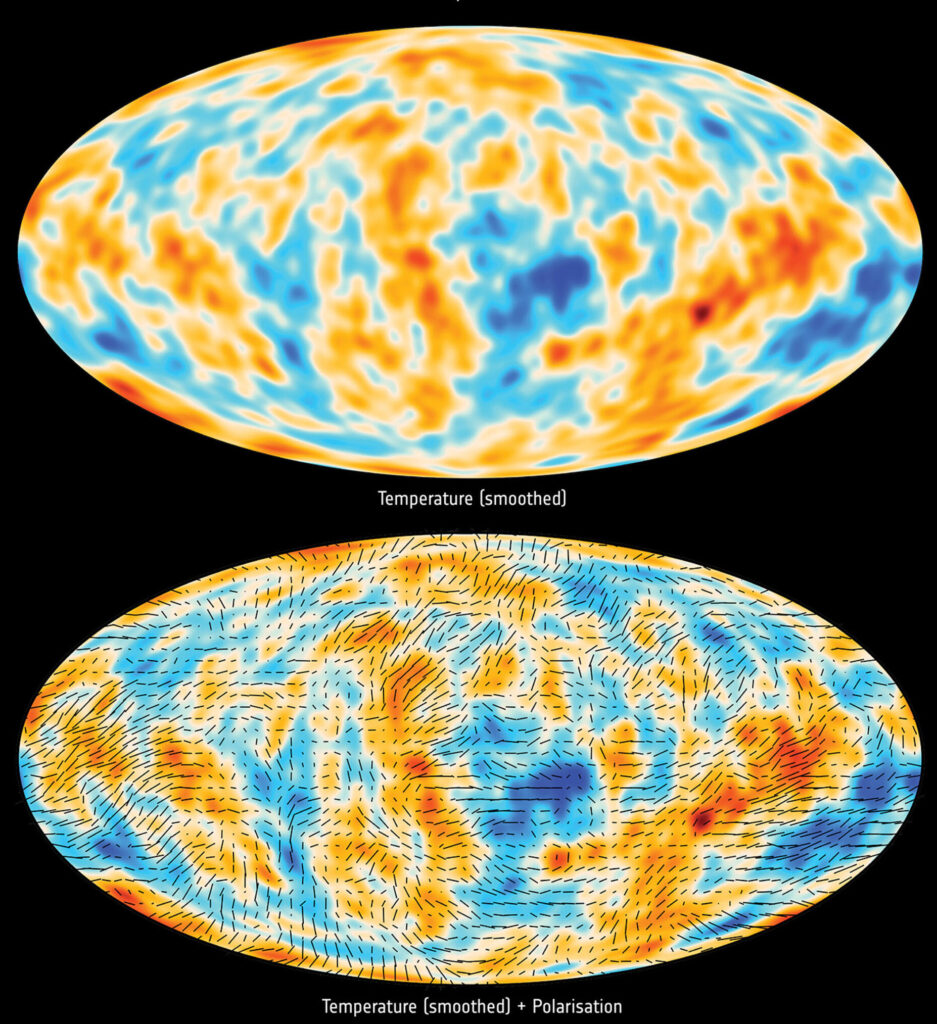

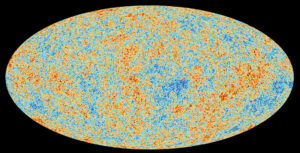

Planck all-sky survey 2013 cosmic microwave background (CMB) temperature map, showing anisotropies in the temperature of the CMB at the full resolution obtained by Planck. Source: ESA / Planck Collaboration